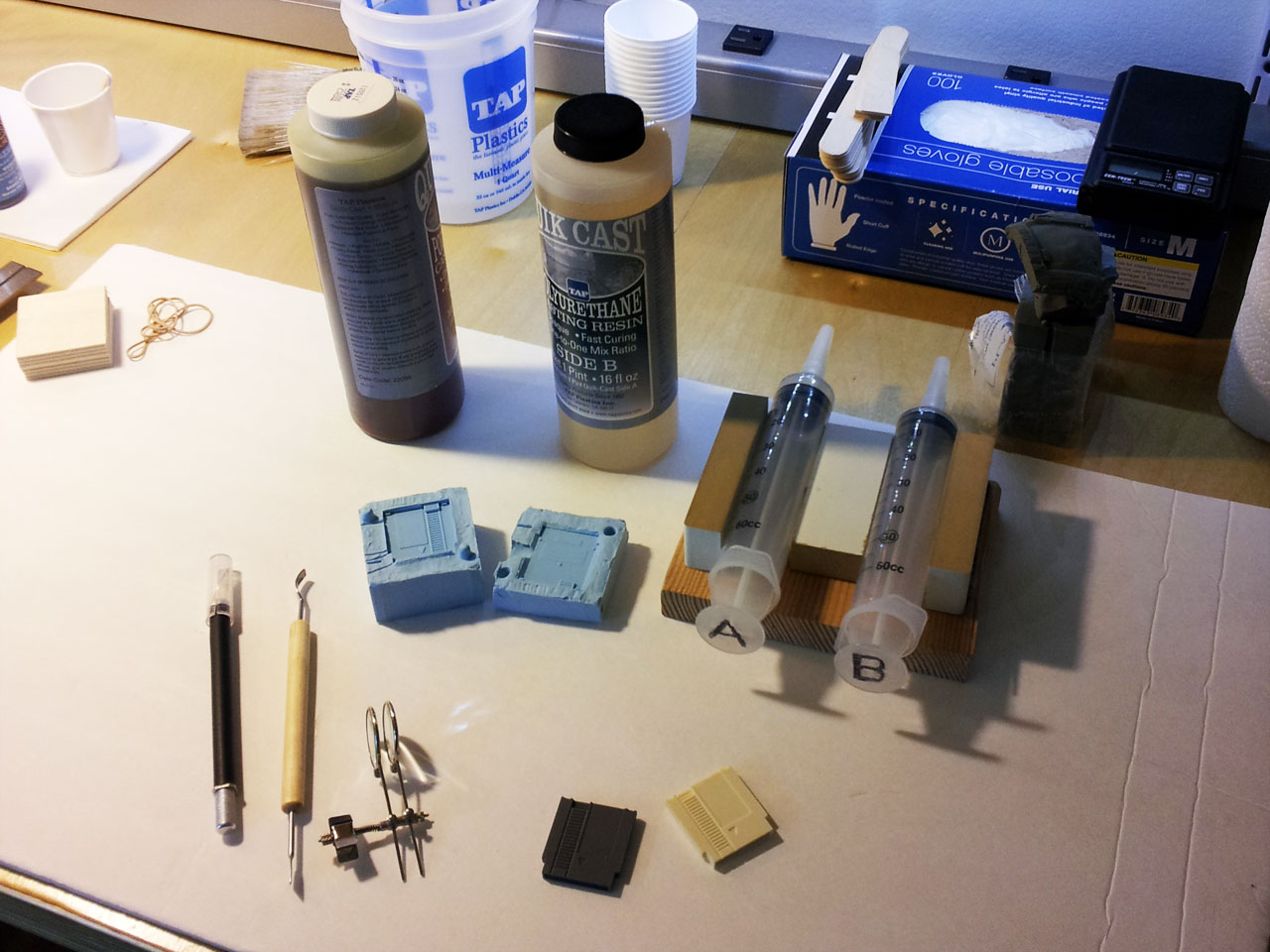

I've been using MadMapper for a while now, and it certainly saves a lot of time and effort compared to the home-brew solution I'd been using before. One of the amazing things that MadMapper can do is what is known as "spatial scanning." The result of a scan usually looks something like this:

union pine (original)

It works by using a camera connected to your computer to capture the scene while projecting a series of black and white bands. The bands are captured by the camera, processed, and the result is a recreation of the scene as it appears from the perspective of the projector lens. You can then use the scanned scene as a background image in the MadMapper UI and simply line up all the projection shapes according to the image (rather than having to watch the physical projected scene while dragging points around).
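To make the band idea a bit more concrete, here's a minimal sketch of one common way to do this kind of structured-light scan: Gray-coded stripes. I don't know what encoding MadMapper actually uses internally, so treat the Gray code choice and every name here (`stripe_patterns`, `decode_column`) as my own assumptions for illustration. Each projected frame contributes one bit, and the sequence of black/white readings a camera pixel sees across the frames spells out which projector column lit it.

```python
def gray_encode(n: int) -> int:
    """Convert a plain binary number to its Gray code."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Convert a Gray code back to the plain binary number."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def stripe_patterns(width: int) -> list[list[int]]:
    """Build one row of stripes per projected frame.

    Each entry is 0 (black) or 1 (white) for a projector column.
    The first frame carries the most significant bit (widest bands).
    """
    bits = max(1, (width - 1).bit_length())
    return [
        [(gray_encode(x) >> b) & 1 for x in range(width)]
        for b in reversed(range(bits))
    ]


def decode_column(observed_bits: list[int]) -> int:
    """Recover the projector column from one camera pixel's readings.

    observed_bits holds the thresholded black/white value the pixel saw
    in each frame, in the same order the frames were projected.
    """
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)


if __name__ == "__main__":
    patterns = stripe_patterns(8)
    for frame in patterns:
        print("".join("#" if v else "." for v in frame))
    # A camera pixel lit by projector column 5 sees this bit sequence:
    seen = [frame[5] for frame in patterns]
    print(seen, "->", decode_column(seen))
```

A real scan would do the same thing for rows with horizontal bands, then copy each camera pixel's brightness to the decoded projector coordinate to build the projector's-eye view.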

While amazing, the spatial scanner often yields a very grainy image. For complex mappings this can be a little tricky to work with. After watching a tutorial video that illustrates a technique to fill in the missing pieces of the image using some Photoshop filters, I decided to take a crack at making an automated tool to patch up the spatial scanner output.
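For a rough idea of what "patching up" can mean, here's a small void-filling sketch. To be clear, this is neither the Photoshop technique from the tutorial nor my own tool; it's just one simple approach, under the assumption that missing pixels show up as pure black (0) in a grayscale image stored as a list of rows. Each void takes the median of its valid neighbours, and the pass repeats so larger holes fill in from their edges. The function name `fill_voids` and its parameters are made up for this example.

```python
from statistics import median_low


def fill_voids(image, missing=0, max_passes=10):
    """Fill missing pixels with the median of their valid 3x3 neighbours.

    image is a list of rows of grayscale ints. Pixels equal to `missing`
    are treated as voids. The input is not modified.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    current = [row[:] for row in image]

    for _ in range(max_passes):
        changed = False
        # Write into a copy so a pass only reads values from the previous pass.
        result = [row[:] for row in current]
        for y in range(height):
            for x in range(width):
                if current[y][x] != missing:
                    continue
                neighbours = [
                    current[ny][nx]
                    for ny in range(max(0, y - 1), min(height, y + 2))
                    for nx in range(max(0, x - 1), min(width, x + 2))
                    if (ny, nx) != (y, x) and current[ny][nx] != missing
                ]
                if neighbours:
                    # median_low returns an existing value, so no new
                    # in-between gray levels are invented at edges.
                    result[y][x] = median_low(neighbours)
                    changed = True
        current = result
        if not changed:
            break
    return current


if __name__ == "__main__":
    scan = [
        [50, 50, 0, 200, 200],
        [50, 0, 0, 200, 200],
        [50, 50, 0, 0, 200],
    ]
    for row in fill_voids(scan):
        print(row)
```

I went with a median rather than an average because averaging smears the boundary between bright and dark regions, and edges are the part I care about most when mapping.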

In the end, I came up with two ways of processing the scan images: one is a Photoshop macro, the other is a Processing sketch. Each has its own strengths and weaknesses, but so far the Processing sketch tends to sharpen up the areas of the image that are most important to me when doing projection mapping: details and edges.

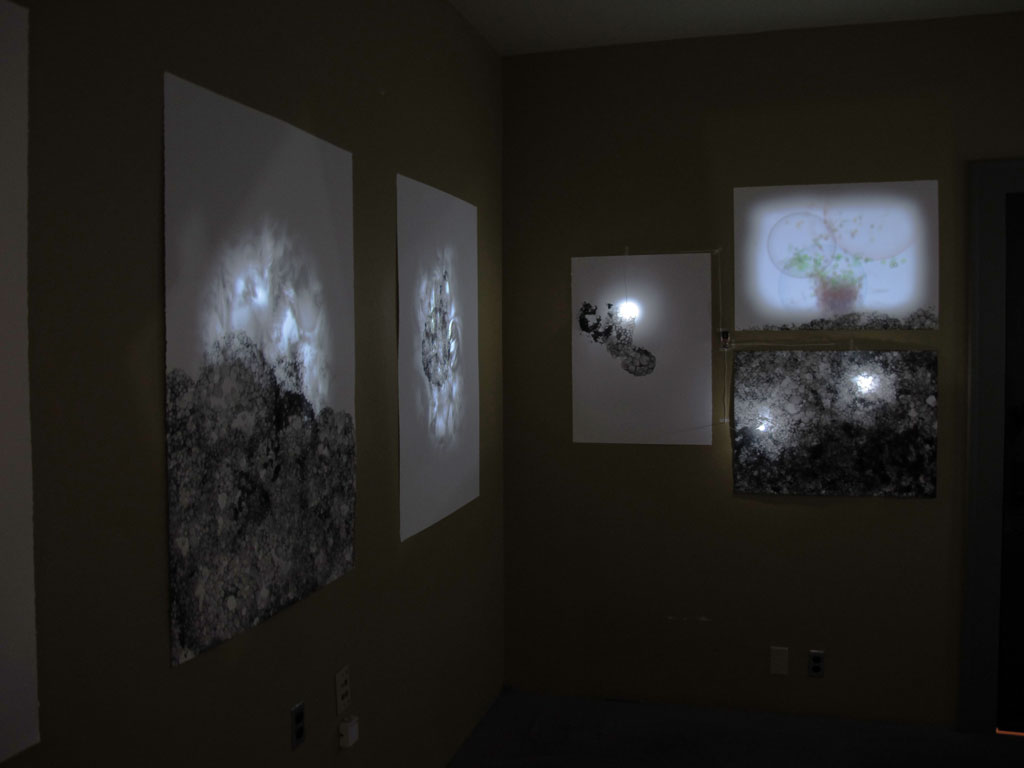

Here's a scan of the stage at Union/Pine. Both Photoshop and the Processing sketch handle this one pretty well. Processing wins out on the details of the back wall.

Here's a scan of the stage at the Doug Fir. Processing fills in more of the voids, but the borders are a little smoother with the Photoshop filter.

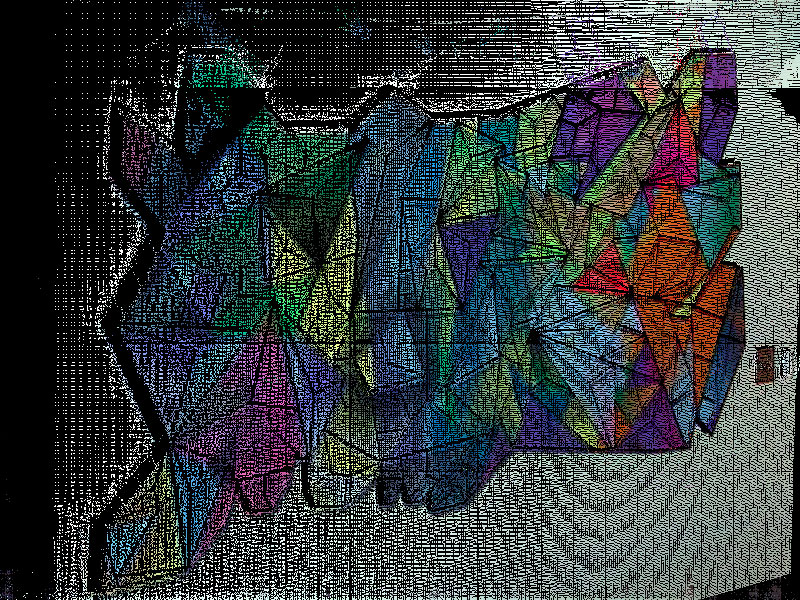

Still life: a gorillapod and a monome. The legs of the gorillapod are better defined with the Photoshop filter, but the Processing sketch yields better details in the monome buttons.

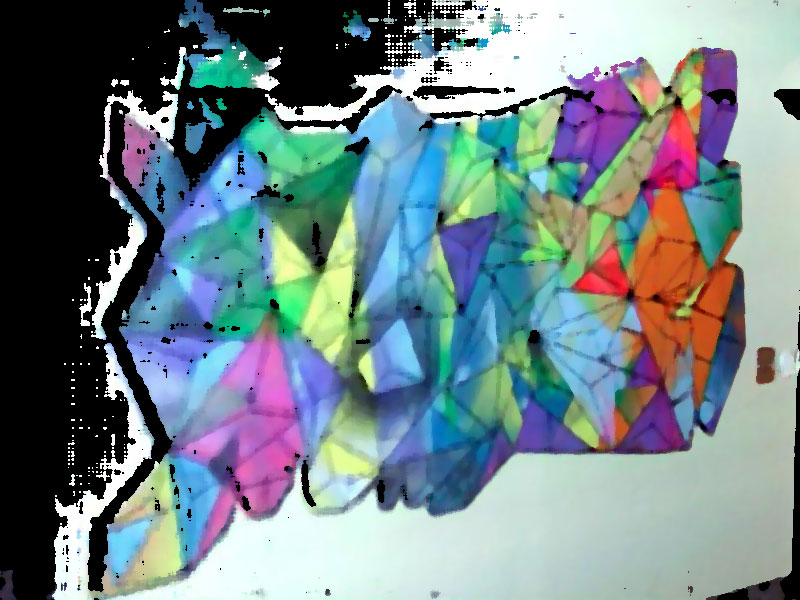

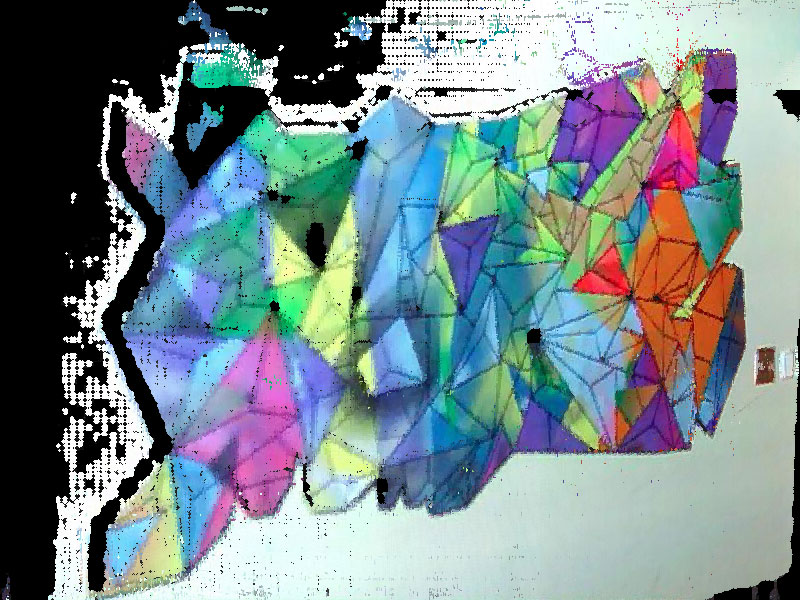

A painting. The Processing sketch wins this one quite handily: the edge detail is much clearer between the colored polygons.

As I said, it's kind of a toss-up for these two tools. I'll keep using both and pick whichever produces the best result for the task at hand.

I'm still working on the Processing sketch but will release it once I get it working as a stand-alone utility (it currently requires code tweaks for each usage).

If you have Photoshop, you can download the macro here.